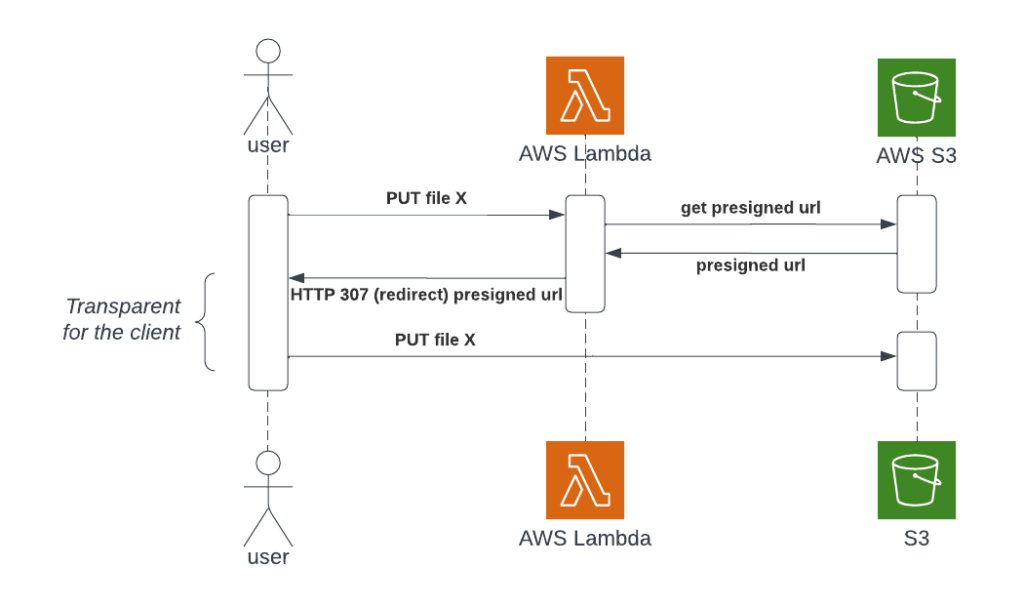

Today I’m going to show how you can build a robust file upload system on AWS S3 with an HTTP(S) endpoint and S3 presigned URLs. We are going to use a Lambda function with a function URL to expose the HTTP(S) endpoint.

The idea is to give our users a fixed URL they can upload a file to. This fixed URL (our HTTP(S) endpoint) is a Lambda function URL. When it is called, the function generates an S3 presigned URL and transparently redirects the user's request to it. We need the HTTP 307 status code to make the redirect work with the PUT method, because this specific code “guarantees that the method and the body will not be changed when the redirected request is made”.

With all this in place, our users can upload a file with a simple curl command: `curl -LX PUT -T "/path/to/file" "LAMBDA_FUNCTION_URL"` (`-T` sends the file as the request body and `-L` makes curl follow the redirect).
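To see what `curl -L` does for us, here is a minimal Python sketch using only the standard library: send the PUT, and if the answer is a 307 (or 308), re-send the same method and body to the `Location` URL. The helper names `send_once` and `put_following_redirects` are hypothetical, and the sender is injectable so the redirect logic can be exercised without AWS.

```python
import http.client
from typing import Callable, Dict, Tuple
from urllib.parse import urljoin, urlsplit

# Hypothetical sender type: performs ONE request (no redirect following)
# and returns (status_code, lower-cased response headers).
Sender = Callable[[str, str, bytes], Tuple[int, Dict[str, str]]]


def send_once(method: str, url: str, body: bytes) -> Tuple[int, Dict[str, str]]:
    """Send a single HTTP(S) request with http.client and return status + headers."""
    parts = urlsplit(url)
    conn_cls = (
        http.client.HTTPSConnection if parts.scheme == "https" else http.client.HTTPConnection
    )
    conn = conn_cls(parts.netloc, timeout=30)
    target = parts.path or "/"
    if parts.query:
        target += "?" + parts.query  # keep the presigned query string intact
    try:
        conn.request(method, target, body=body)  # a bytes body sets Content-Length
        resp = conn.getresponse()
        resp.read()  # drain the body before closing the connection
        return resp.status, {k.lower(): v for k, v in resp.getheaders()}
    finally:
        conn.close()


def put_following_redirects(
    url: str, body: bytes, send: Sender = send_once, max_hops: int = 5
) -> Tuple[int, str]:
    """PUT `body` to `url`, re-sending the same PUT on 307/308 like `curl -L`."""
    for _ in range(max_hops + 1):
        status, headers = send("PUT", url, body)
        if status in (307, 308) and "location" in headers:
            # 307/308: the client must keep the method and body unchanged
            url = urljoin(url, headers["location"])
            continue
        return status, url
    raise RuntimeError(f"more than {max_hops} redirects")
```

Usage mirrors the curl command: pass `"LAMBDA_FUNCTION_URL"` and the bytes of `open("/path/to/file", "rb").read()`; a 200 on the final hop means S3 accepted the object. The sketch reads the whole file into memory, which is fine for small uploads.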

To do all this, we’ll need to:

- create an S3 bucket

- create a Lambda Function with an HTTP(S) endpoint

- grant S3 access to the Lambda function

- make the Lambda generate the presigned URL

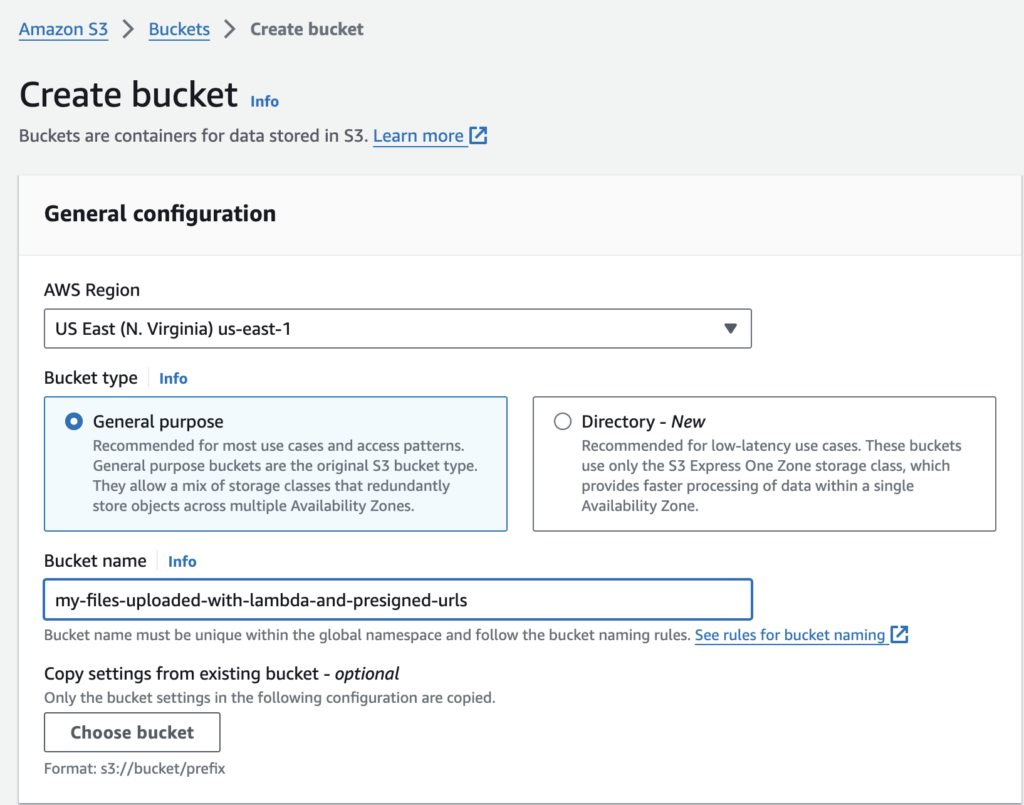

Create an AWS S3 bucket to save files

Let’s create an S3 bucket named `my-files-uploaded-with-lambda-and-presigned-urls`:
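The same bucket could also be created from code. This is a minimal sketch assuming a boto3 S3 client is passed in (e.g. `boto3.client("s3", region_name="us-east-1")`, the region used in the policy further down); `create_upload_bucket` is a hypothetical helper. The one subtlety it handles: S3 rejects an explicit `LocationConstraint` of `us-east-1`, so the bucket configuration is only sent for other regions.

```python
from typing import Any, Dict


def create_upload_bucket(s3_client: Any, bucket_name: str, region: str) -> Dict[str, Any]:
    """Create the upload bucket with a boto3-style S3 client."""
    kwargs: Dict[str, Any] = {"Bucket": bucket_name}
    if region != "us-east-1":
        # Every region except us-east-1 needs an explicit location constraint
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return s3_client.create_bucket(**kwargs)
```

For this article, the call would be `create_upload_bucket(s3, "my-files-uploaded-with-lambda-and-presigned-urls", "us-east-1")`.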

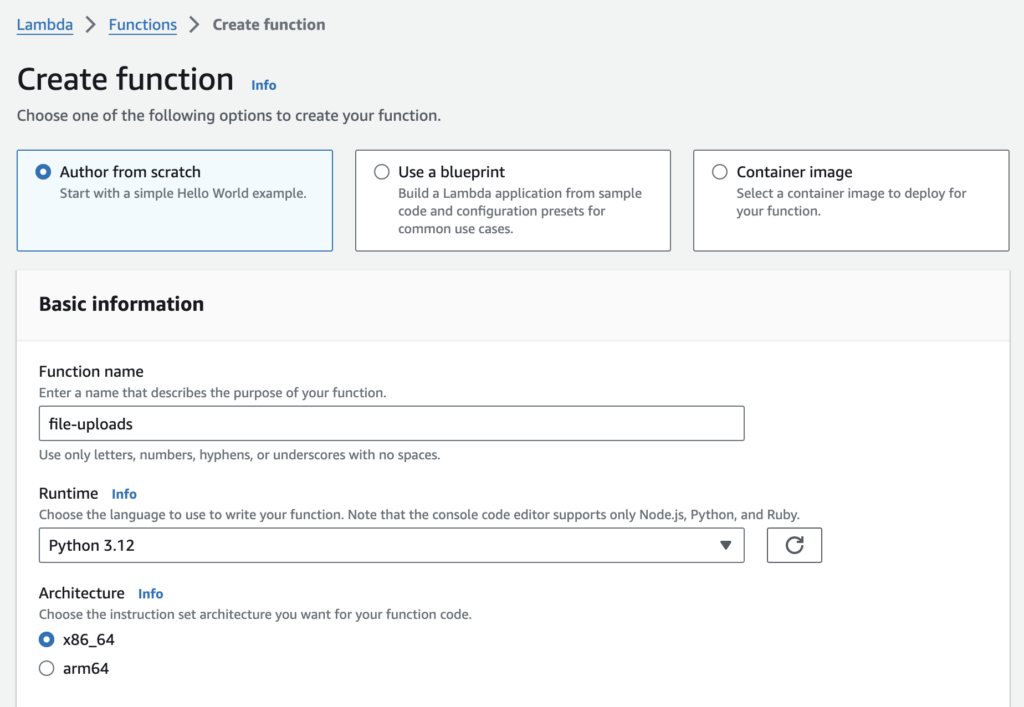

Create an AWS Lambda Function with an HTTP(S) endpoint

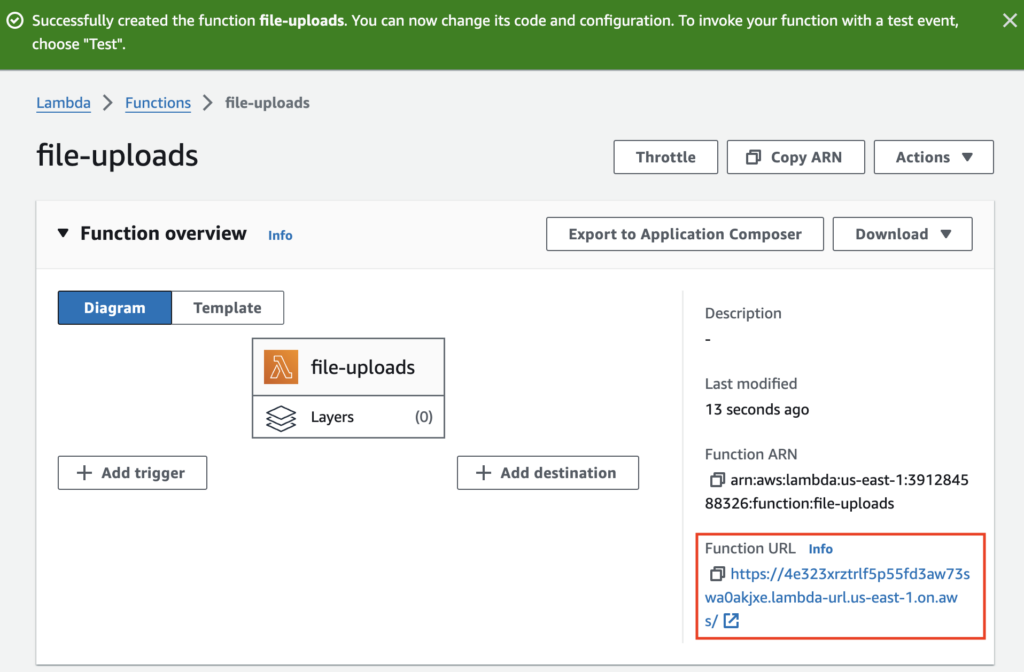

Let’s create a Python AWS Lambda function called `file-uploads`:

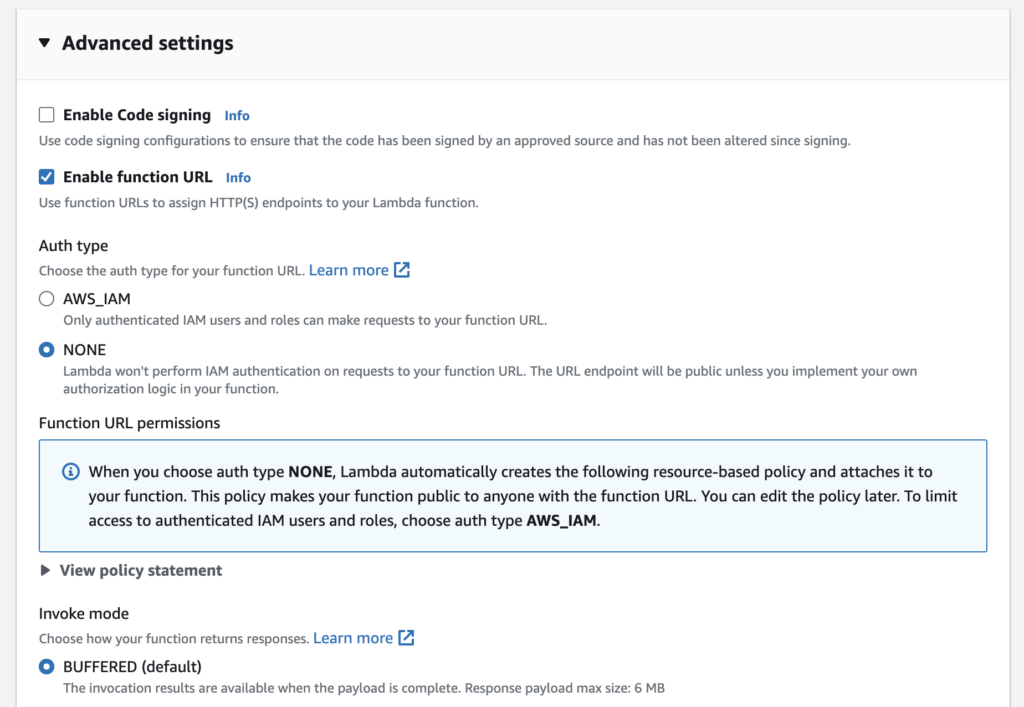

and enable its function URL without authentication (auth type NONE):

Our Lambda is now created and reachable at its function URL:
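Enabling the function URL can also be scripted. A minimal sketch, assuming a boto3 Lambda client is passed in (e.g. `boto3.client("lambda", region_name="us-east-1")`) and assuming that a single `lambda:InvokeFunctionUrl` statement, like the one the console adds for auth type NONE, is the resource-based policy needed for public access. The helper name `enable_public_function_url` and the statement ID are hypothetical.

```python
from typing import Any


def enable_public_function_url(lambda_client: Any, function_name: str) -> str:
    """Expose a Lambda through a function URL with no IAM auth and return the URL."""
    # AuthType "NONE": anyone who knows the URL can invoke the function
    config = lambda_client.create_function_url_config(
        FunctionName=function_name,
        AuthType="NONE",
    )
    # Assumed resource-based policy statement allowing public invocation
    # through the function URL (mirrors what the console creates)
    lambda_client.add_permission(
        FunctionName=function_name,
        StatementId="FunctionURLAllowPublicAccess",
        Action="lambda:InvokeFunctionUrl",
        Principal="*",
        FunctionUrlAuthType="NONE",
    )
    return config["FunctionUrl"]
```

Calling `enable_public_function_url(lambda_client, "file-uploads")` returns the URL to use in place of `LAMBDA_FUNCTION_URL`.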

Grant S3 access to the Lambda function

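Since “a presigned URL is limited by the permissions of the user who creates it”, we have to allow our Lambda to put objects in the bucket. Let’s edit the policy of the role assumed by the Lambda (the role can be found under Configuration -> Permissions -> Execution role). The first two statements are the default logging permissions; the last one, which allows `s3:PutObject` on the bucket, is the one we add: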

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "logs:CreateLogGroup",
      "Resource": "arn:aws:logs:us-east-1:ACCOUNT_ID:*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "logs:CreateLogStream",
        "logs:PutLogEvents"
      ],
      "Resource": [
        "arn:aws:logs:us-east-1:ACCOUNT_ID:log-group:/aws/lambda/file-uploads:*"
      ]
    },
    {
      "Effect": "Allow",
      "Action": "s3:PutObject",
      "Resource": [
        "arn:aws:s3:::my-files-uploaded-with-lambda-and-presigned-urls",
        "arn:aws:s3:::my-files-uploaded-with-lambda-and-presigned-urls/*"
      ]
    }
  ]
}
```

Make the Lambda generate the presigned URL

Here is the code I used to generate the presigned URL and redirect the client to it with an HTTP 307:

```python
import boto3

# Create the client once, outside the handler, so warm invocations reuse it
s3_client = boto3.client('s3')


def lambda_handler(event, context):
    # Generate a presigned URL that allows a PUT of this object key until it expires
    presigned_url = s3_client.generate_presigned_url(
        'put_object',
        Params={
            'Bucket': 'my-files-uploaded-with-lambda-and-presigned-urls',
            'Key': 'my_upload.txt',
        },
        ExpiresIn=3600,  # URL validity in seconds
        HttpMethod='PUT',
    )

    # Build the redirect response
    response = {
        'statusCode': 307,  # 307 keeps the PUT method and body on redirect
        'headers': {
            'Location': presigned_url  # redirect target: the presigned URL
        }
    }

    # Return the response to trigger the redirect
    return response
```

Upload a file

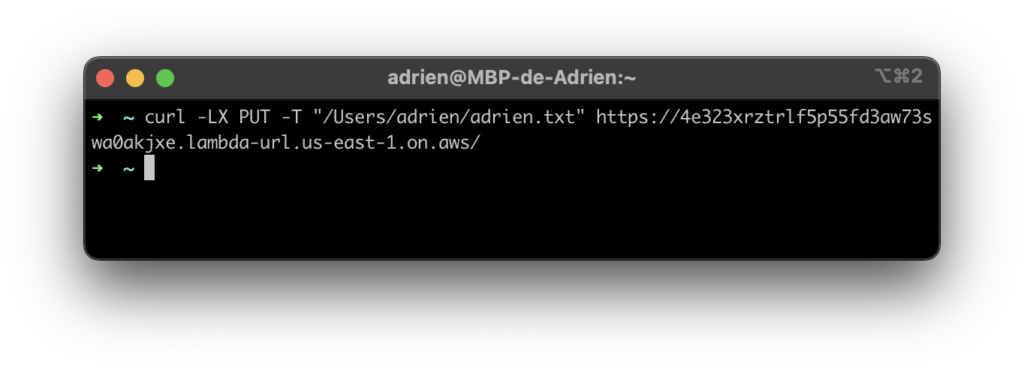

Let’s test the upload with a simple curl request: `curl -LX PUT -T "/path/to/file" "LAMBDA_FUNCTION_URL"`

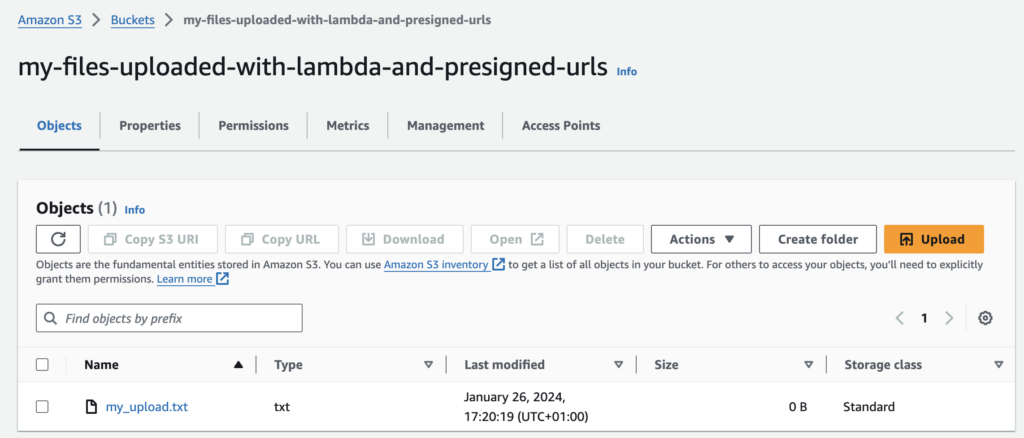

We can see the file in the bucket:

Well done 🥳

To go further

This article gives a simple overview of what can be done with Lambda and S3 presigned URLs. Of course, we could also add an authentication layer with Cognito and/or API Gateway to prevent anyone from uploading to the URL. We could also expose the endpoint on a custom domain name through API Gateway. Finally, the naming of uploaded files could be more elaborate: as written, every upload replaces the same `my_upload.txt` object.
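One way to make the naming more elaborate, sketched under assumptions: each upload gets a random `uploads/<uuid>-<filename>` key, where `filename` is a hypothetical optional query parameter (e.g. `LAMBDA_FUNCTION_URL?filename=report.pdf`), and non-PUT requests are rejected with a 405. It reads the method and query string from the function URL event (`requestContext.http.method`, `queryStringParameters`). The S3 client is injected so the handler can be tested without AWS; `make_handler` and `build_object_key` are illustrative names, not part of any library.

```python
import uuid
from typing import Any, Callable, Dict

BUCKET = "my-files-uploaded-with-lambda-and-presigned-urls"


def build_object_key(event: Dict[str, Any], new_id: Callable[[], str] = lambda: uuid.uuid4().hex) -> str:
    """Build a unique key, keeping an optional ?filename=... query parameter."""
    params = event.get("queryStringParameters") or {}
    # Keep only the last path segment so a client cannot pick its own prefix
    filename = params.get("filename", "upload").rsplit("/", 1)[-1] or "upload"
    return f"uploads/{new_id()}-{filename}"


def make_handler(s3_client: Any, new_id: Callable[[], str] = lambda: uuid.uuid4().hex):
    """Return a Lambda handler bound to an injected boto3-style S3 client."""

    def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
        method = event.get("requestContext", {}).get("http", {}).get("method")
        if method != "PUT":
            # A presigned put_object URL only makes sense for PUT uploads
            return {"statusCode": 405, "headers": {"Allow": "PUT"}}
        key = build_object_key(event, new_id)
        url = s3_client.generate_presigned_url(
            "put_object",
            Params={"Bucket": BUCKET, "Key": key},
            ExpiresIn=3600,
            HttpMethod="PUT",
        )
        return {"statusCode": 307, "headers": {"Location": url}}

    return lambda_handler
```

In the deployed function, the module would define `lambda_handler = make_handler(boto3.client("s3"))`, so the existing handler setting keeps working. The `s3:PutObject` statement on `.../*` in the role policy already covers the `uploads/` prefix.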
